VIVE Facial Tracker

You can use the VIVE Facial Tracker to track mouth movement.

* To apply mouth movement to any VRM model, VirtualCast combines five of the VRM format's preset BlendShapes (A, I, U, E and O) to create the corresponding mouth shapes.
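
The idea of combining the five vowel BlendShapes can be illustrated with a minimal sketch. This is not VirtualCast's actual mapping, which is not documented on this page. The input fields (`jaw_open`, `mouth_wide`, `mouth_pucker`), the `LipSample` type, and the weighting formula are all hypothetical and chosen only to show how a few lip measurements could be turned into weights for A, I, U, E and O.

```python
from dataclasses import dataclass
from typing import Dict

# The five VRM preset vowel BlendShapes named in the text above.
VRM_VOWELS = ("A", "I", "U", "E", "O")


@dataclass
class LipSample:
    """Hypothetical normalized lip measurements, each expected in [0, 1]."""
    jaw_open: float      # how far the jaw is open
    mouth_wide: float    # how far the mouth corners are stretched sideways
    mouth_pucker: float  # how far the lips are rounded or pushed forward


def clamp01(x: float) -> float:
    """Clamp a value into the [0, 1] range used for BlendShape weights."""
    return max(0.0, min(1.0, x))


def blend_vowels(sample: LipSample) -> Dict[str, float]:
    """Map one lip sample to weights for the five vowel BlendShapes.

    Assumed (illustrative) mapping:
      A: open jaw, neither wide nor rounded
      I: wide mouth, closed jaw
      U: rounded lips, closed jaw
      E: wide mouth, open jaw
      O: rounded lips, open jaw
    """
    jaw = clamp01(sample.jaw_open)
    wide = clamp01(sample.mouth_wide)
    pucker = clamp01(sample.mouth_pucker)

    raw = {
        "A": jaw * (1.0 - wide) * (1.0 - pucker),
        "I": wide * (1.0 - jaw),
        "U": pucker * (1.0 - jaw),
        "E": wide * jaw,
        "O": pucker * jaw,
    }

    # Scale down only when the combined weight would exceed 1,
    # so that overlapping shapes do not over-deform the mouth.
    total = sum(raw.values())
    scale = 1.0 / total if total > 1.0 else 1.0
    return {name: weight * scale for name, weight in raw.items()}
```

For example, `blend_vowels(LipSample(jaw_open=1.0, mouth_wide=0.0, mouth_pucker=0.0))` gives a weight of 1.0 for A and 0.0 for the other four shapes. Because only the five vowel presets are used, a scheme of this kind works with any VRM model that defines them, with no model-specific BlendShapes required.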

As a prerequisite, SR_Runtime must be installed and the lip module must be recognized correctly.

* The status shown when the lip module is recognized:

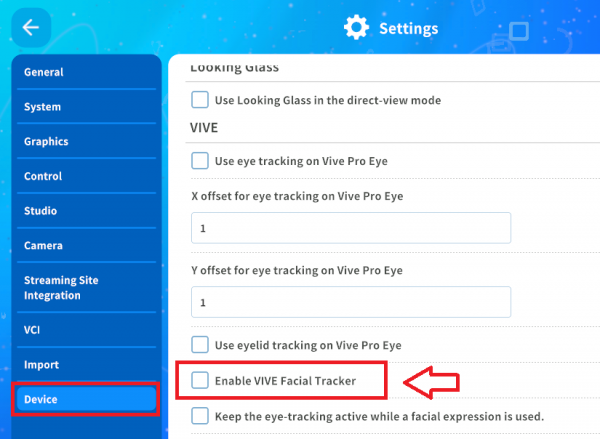

Settings in VirtualCast

Change the following setting from the settings on the title screen.

Select the check box: [Device] > [VIVE] > [VIVE Facial Tracker].

When everything is set up correctly, the lip module is activated during calibration and mouth tracking starts.

Last modified: 2021/04/15 16:13 by h-eguchi